Welcome to PyTorch Tutorials

New to PyTorch?

The 60-minute blitz is the most common starting point and provides a broad view of how to use PyTorch. It covers the basics all the way to constructing deep neural networks.

Deep Learning with PyTorch: A 60 Minute Blitz

Understand PyTorch’s Tensor library and neural networks at a high level.

Learning PyTorch with Examples

This tutorial introduces the fundamental concepts of PyTorch through self-contained examples.

What is torch.nn really?

Use torch.nn to create and train a neural network.

Visualizing Models, Data, and Training with TensorBoard

Learn to use TensorBoard to visualize data and model training.

TorchVision Object Detection Finetuning Tutorial

Finetune a pre-trained Mask R-CNN model.

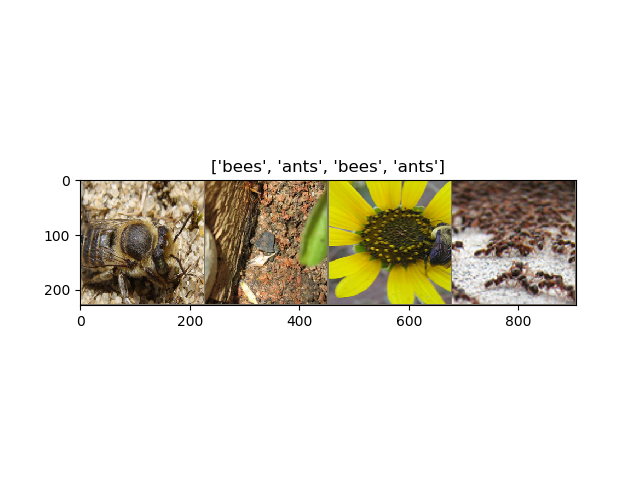

Transfer Learning for Computer Vision Tutorial

Train a convolutional neural network for image classification using transfer learning.

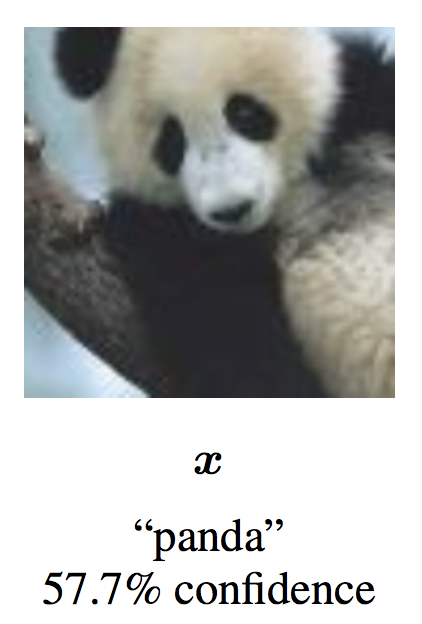

Adversarial Example Generation

Learn how to generate adversarial examples that fool an image classifier, using the fast gradient sign method (FGSM).
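One common way to generate adversarial examples is the fast gradient sign method: move every input feature by a small `epsilon` in the direction of the sign of the loss gradient with respect to the input, x_adv = x + epsilon * sign(grad_x J). The pure-Python sketch below only illustrates that update and is not the tutorial's code. A hypothetical two-feature logistic-regression model (weights `w`, bias `b`) stands in for a trained CNN so that the input gradient can be written out by hand.

```python
import math

def sign(v):
    # returns -1, 0 or 1
    return (v > 0) - (v < 0)

def loss_and_input_grad(w, b, x, y):
    """Binary cross-entropy of a logistic-regression model and its gradient w.r.t. the input x."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    # for this model, dLoss/dx_i = (p - y) * w_i
    grad = [(p - y) * wi for wi in w]
    return loss, grad

def fgsm(w, b, x, y, epsilon):
    _, grad = loss_and_input_grad(w, b, x, y)
    # step each feature by epsilon in the direction that increases the loss
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# hypothetical model parameters and a correctly classified input (label 1)
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1
x_adv = fgsm(w, b, x, y, epsilon=0.1)
```

With these numbers the perturbed input is roughly `[0.9, 0.6]`, and the model's loss on it is higher than on the original input. An attack on real images would usually also clip the result back to the valid pixel range.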

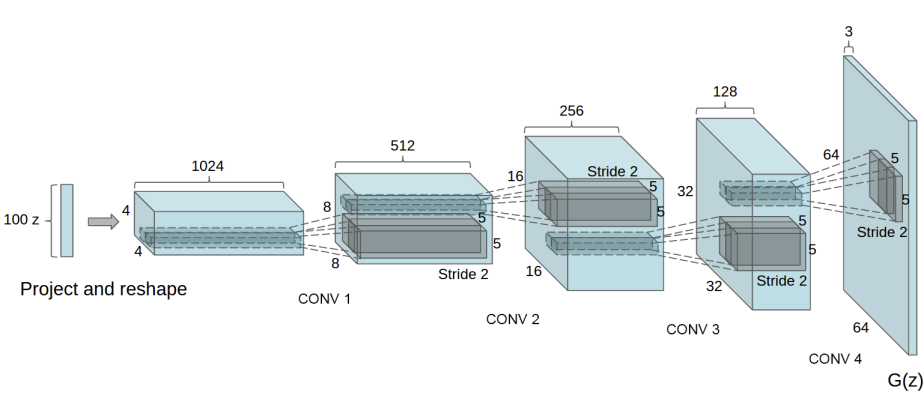

DCGAN Tutorial

Train a generative adversarial network (GAN) to generate new celebrities.

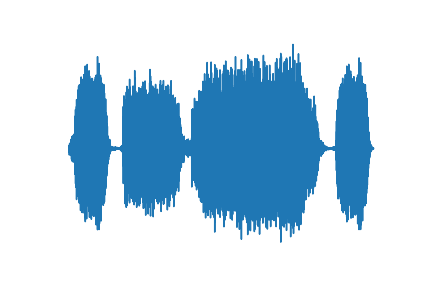

torchaudio Tutorial

Learn to load and preprocess data from a simple dataset with PyTorch's torchaudio library.

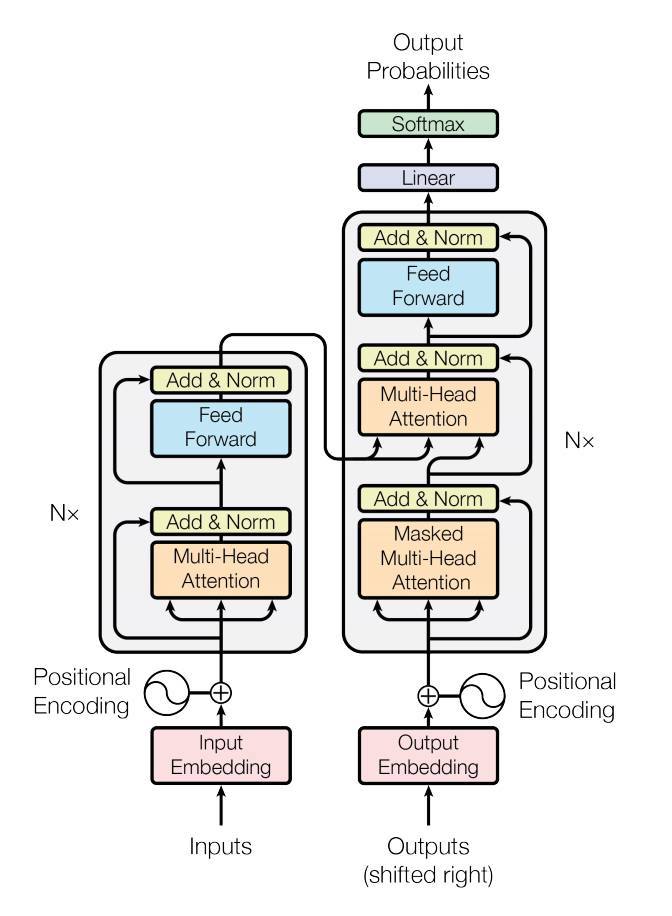

Sequence-to-Sequence Modeling with nn.Transformer and torchtext

Learn how to train a sequence-to-sequence model that uses the nn.Transformer module.
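nn.Transformer is built from attention layers that use scaled dot-product attention, softmax(Q K^T / sqrt(d)) V. As a rough, dependency-free illustration (plain Python lists instead of the nn.Transformer API, a single head, no masking), the sketch below evaluates that formula for one hypothetical query against two keys.

```python
import math

def softmax(xs):
    # subtract the max for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """queries, keys: lists of d-dim vectors; values: one vector per key."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # output is the attention-weighted average of the value vectors
        out = [
            sum(weight * v[j] for weight, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
outputs, attn = scaled_dot_product_attention(queries, keys, values)
```

Because the query points in the same direction as the first key, the first attention weight is larger (about 0.67), so the output leans toward the first value vector.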

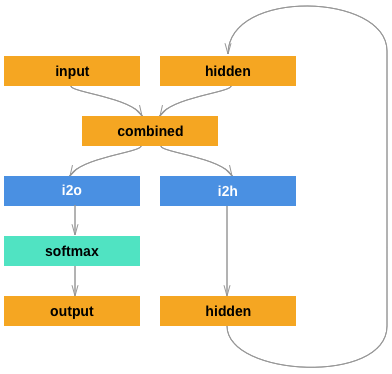

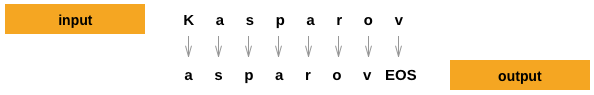

NLP from Scratch: Classifying Names with a Character-level RNN

Build and train a basic character-level RNN to classify words from scratch, without the use of torchtext. First in a series of three tutorials.

NLP from Scratch: Generating Names with a Character-level RNN

After using a character-level RNN to classify names, learn how to generate names for a given language. Second in a series of three tutorials.

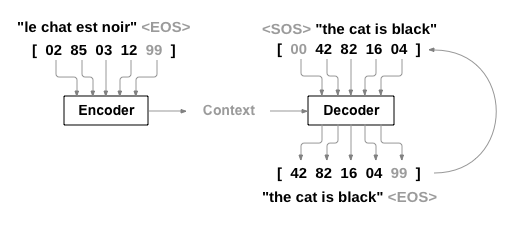

NLP from Scratch: Translation with a Sequence-to-sequence Network and Attention

This is the third and final tutorial on doing “NLP From Scratch”, where we write our own classes and functions to preprocess the data to do our NLP modeling tasks.

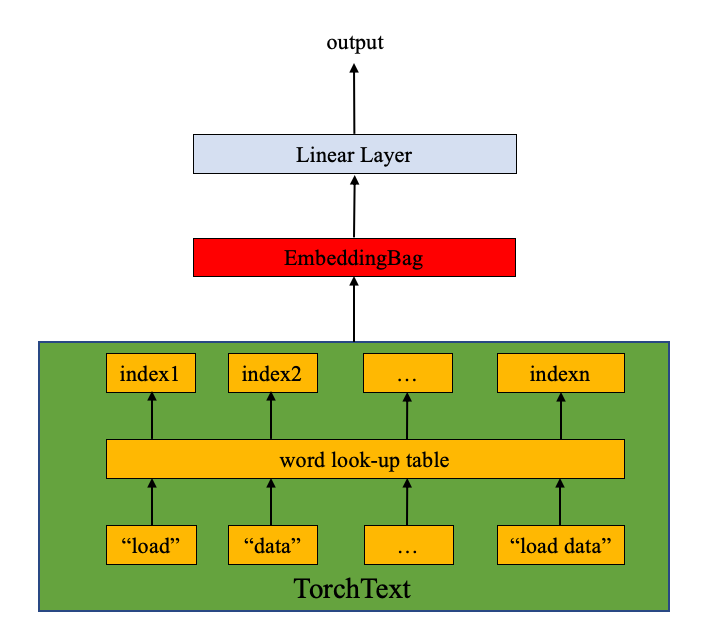

Text Classification with Torchtext

Use the torchtext library to build a text classification dataset and train a model to classify text.

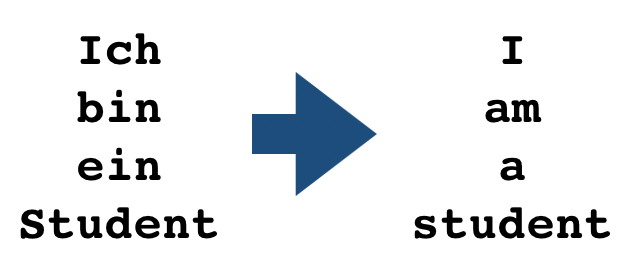

Language Translation with Torchtext

Use torchtext to preprocess data from a well-known dataset containing both English and German. Then use it to train a sequence-to-sequence model.

Reinforcement Learning (DQN)

Learn how to use PyTorch to train a Deep Q Learning (DQN) agent on the CartPole-v0 task from the OpenAI Gym.
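DQN trains Q(s, a) toward the temporal-difference target r + gamma * max_a' Q(s', a'), with no bootstrapped term when s' is terminal. The sketch below computes only that target for a small batch. The next-state Q-values are passed in as hypothetical plain lists, whereas a real implementation would obtain them from a network (often a separate, periodically updated target network).

```python
def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """TD targets r + gamma * max_a' Q(s', a') for a batch of transitions.

    next_q_values: for each transition, the Q-value of every action in s'
    dones: True where s' is terminal, so no future value is added
    """
    targets = []
    for r, q_next, done in zip(rewards, next_q_values, dones):
        future = 0.0 if done else gamma * max(q_next)
        targets.append(r + future)
    return targets

# hypothetical batch of two transitions; the second one ends the episode
targets = dqn_targets(
    rewards=[1.0, 1.0],
    next_q_values=[[0.5, 2.0], [3.0, 1.0]],
    dones=[False, True],
    gamma=0.9,
)
```

The first target is 1.0 + 0.9 * 2.0 = 2.8; the second is just the reward, 1.0, because the episode ended.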

Deploying PyTorch in Python via a REST API with Flask

Deploy a PyTorch model using Flask and expose a REST API for model inference, using the example of a pretrained DenseNet 121 model that classifies images.

Introduction to TorchScript

Introduction to TorchScript, an intermediate representation of a PyTorch model (subclass of nn.Module) that can then be run in a high-performance environment such as C++.

Loading a TorchScript Model in C++

Learn how PyTorch lets you go from an existing Python model to a serialized representation that can be loaded and executed purely from C++, with no dependency on Python.

(optional) Exporting a Model from PyTorch to ONNX and Running it using ONNX Runtime

Convert a model defined in PyTorch into the ONNX format and then run it with ONNX Runtime.

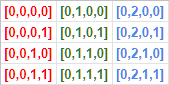

(experimental) Introduction to Named Tensors in PyTorch

Learn how named tensors let you refer to tensor dimensions by name instead of by position.

(experimental) Channels Last Memory Format in PyTorch

Get an overview of the Channels Last memory format and understand how it orders NCHW tensors in memory while preserving the order of their dimensions.
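What changes under Channels Last is the strides, not the shape: a tensor still reports its dimensions as (N, C, H, W), but its elements are stored in N, H, W, C order. The pure-Python sketch below computes both sets of strides for a hypothetical shape (2, 3, 4, 5). In PyTorch, the corresponding tensor would come from `x.to(memory_format=torch.channels_last)`, and its strides can be inspected with `x.stride()`.

```python
def contiguous_strides(shape):
    # row-major (NCHW) strides: the last dimension varies fastest
    strides, step = [], 1
    for size in reversed(shape):
        strides.append(step)
        step *= size
    return tuple(reversed(strides))

def channels_last_strides(shape):
    # logical shape stays (N, C, H, W), but memory is laid out as N, H, W, C,
    # so the channel dimension now varies fastest
    n, c, h, w = shape
    return (h * w * c, 1, w * c, c)

def offset(index, strides):
    # memory offset of the element at `index`
    return sum(i * s for i, s in zip(index, strides))

shape = (2, 3, 4, 5)
print(contiguous_strides(shape))     # (60, 20, 5, 1)
print(channels_last_strides(shape))  # (60, 1, 15, 3)
```

In the channels-last strides the C dimension has stride 1, so all channel values of one pixel sit next to each other in memory, while indexing by (n, c, h, w) works exactly as before.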

Using the PyTorch C++ Frontend

Walk through an end-to-end example of training a model with the C++ frontend by training a DCGAN – a kind of generative model – to generate images of MNIST digits.

Custom C++ and CUDA Extensions

Write custom operations in C++ and CUDA and use them in PyTorch as extensions.

Extending TorchScript with Custom C++ Operators

Learn how to implement a custom TorchScript operator in C++, build it into a shared library, use it in Python to define TorchScript models, and finally load it into a C++ application for inference workloads.

Extending TorchScript with Custom C++ Classes

This is a continuation of the custom operator tutorial, and introduces the API we’ve built for binding C++ classes into TorchScript and Python simultaneously.

Autograd in C++ Frontend

The autograd package helps build flexible and dynamic neural networks. In this tutorial, explore several examples of doing autograd in the PyTorch C++ frontend.

Pruning Tutorial

Learn how to use torch.nn.utils.prune to sparsify your neural networks, and how to extend it to implement your own custom pruning technique.
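Unstructured L1 pruning removes the individual weights with the smallest absolute values. The sketch below mimics that idea on a plain Python list. It is not the `torch.nn.utils.prune` implementation, although `prune.l1_unstructured` likewise keeps the original weights and applies a 0/1 mask to them. Here `amount` is the fraction of weights to prune, and the weight values are hypothetical.

```python
def l1_unstructured_mask(weights, amount):
    """0/1 mask that zeroes the `amount` fraction of weights with the smallest |w|."""
    n_prune = round(amount * len(weights))
    # indices ordered from smallest to largest magnitude
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(by_magnitude[:n_prune])
    return [0 if i in pruned else 1 for i in range(len(weights))]

def apply_mask(weights, mask):
    # the original weights are left untouched; the pruned view is weights * mask
    return [w if m else 0.0 for w, m in zip(weights, mask)]

weights = [0.5, -0.1, 0.3, -0.8, 0.05]
mask = l1_unstructured_mask(weights, amount=0.4)
print(mask)                       # [1, 0, 1, 1, 0]
print(apply_mask(weights, mask))  # [0.5, 0.0, 0.3, -0.8, 0.0]
```

Keeping the mask separate from the weights is what makes it possible to inspect, combine, or later remove a pruning step.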

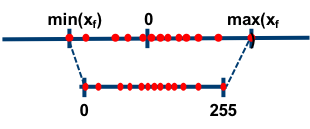

(experimental) Dynamic Quantization on an LSTM Word Language Model

Apply dynamic quantization, the easiest form of quantization, to an LSTM-based next-word prediction model.
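In dynamic quantization the weights are converted to integers ahead of time, while the scale and zero point for the activations are chosen at run time from the range actually observed. The sketch below shows only that run-time step, using a simplified asymmetric 8-bit (0 to 255) affine scheme on a hypothetical list of activations. In PyTorch the whole process is handled by `torch.quantization.quantize_dynamic` rather than hand-written code like this.

```python
def choose_qparams(values, qmin=0, qmax=255):
    """Pick an affine scale and zero point from the observed min/max of `values`."""
    lo = min(min(values), 0.0)  # the range must include 0 so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))

def quantize(values, scale, zero_point, qmin=0, qmax=255):
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]

def dequantize(qvalues, scale, zero_point):
    return [(q - zero_point) * scale for q in qvalues]

# hypothetical activations observed during one forward pass
activations = [-1.0, -0.25, 0.0, 0.5, 2.0]
scale, zp = choose_qparams(activations)
q = quantize(activations, scale, zp)
restored = dequantize(q, scale, zp)
```

Every value comes back within half a quantization step (`scale / 2`) of the original, and 0.0 is reproduced exactly because the range is forced to include zero.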

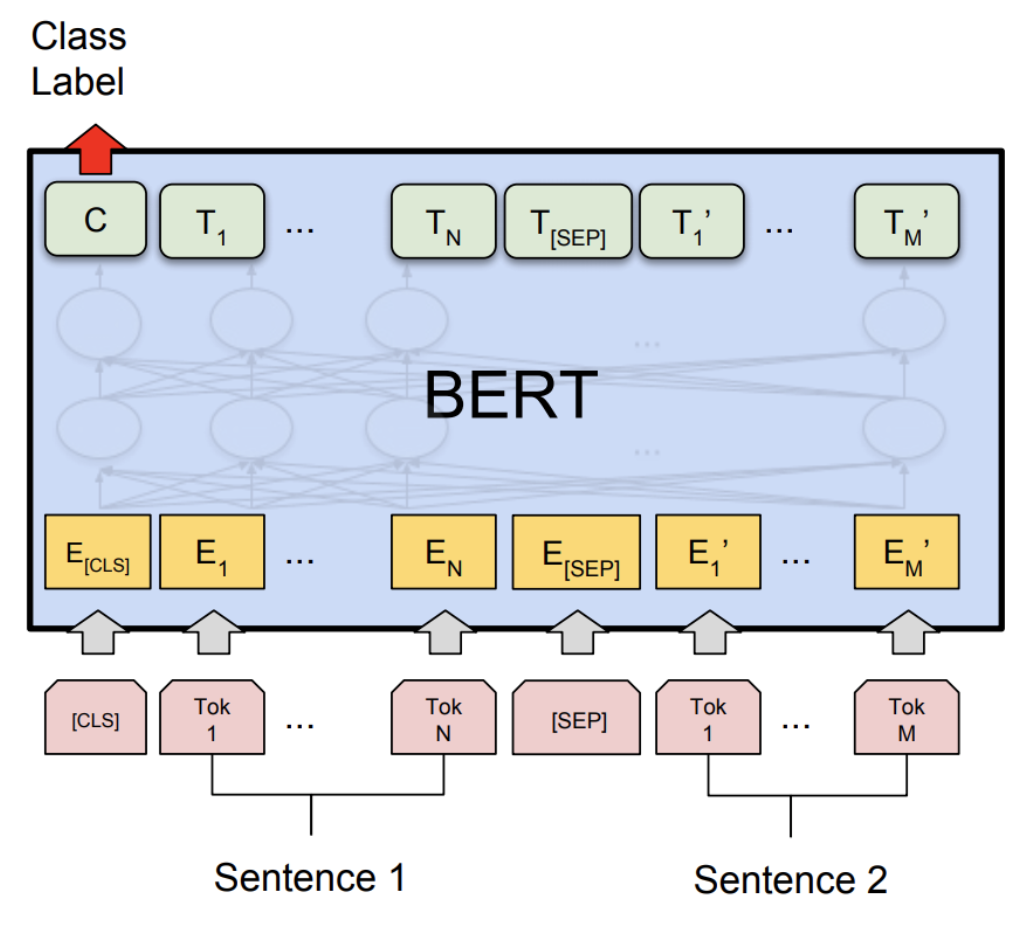

(experimental) Dynamic Quantization on BERT

Apply dynamic quantization to a BERT (Bidirectional Encoder Representations from Transformers) model.

(experimental) Static Quantization with Eager Mode in PyTorch

Learn post-training static quantization, and how per-channel quantization and quantization-aware training improve the accuracy of the quantized model.
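Per-channel quantization gives each output channel of a weight tensor its own scale instead of sharing one scale across the whole tensor. The simplified sketch below (symmetric integers in [-127, 127], hypothetical weights, plain Python rather than PyTorch's quantization APIs) shows why this helps when channels have very different ranges.

```python
def symmetric_scale(values, qmax=127):
    # map the largest magnitude onto qmax; guard against an all-zero channel
    largest = max(abs(v) for v in values)
    return largest / qmax if largest > 0 else 1.0

def fake_quantize(values, scale, qmax=127):
    # quantize to integers in [-qmax, qmax], then immediately dequantize
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]

def channel_errors(weight_rows, per_channel):
    """Max absolute quantization error for each output channel (row)."""
    if per_channel:
        scales = [symmetric_scale(row) for row in weight_rows]
    else:
        shared = symmetric_scale([v for row in weight_rows for v in row])
        scales = [shared] * len(weight_rows)
    return [
        max(abs(v - fq) for v, fq in zip(row, fake_quantize(row, s)))
        for row, s in zip(weight_rows, scales)
    ]

# hypothetical weights: channel 0 has a much smaller range than channel 1
weights = [[0.01, -0.02, 0.015], [1.5, -2.0, 0.7]]
per_tensor = channel_errors(weights, per_channel=False)
per_channel = channel_errors(weights, per_channel=True)
```

With one shared scale, channel 0's weights pick up errors of several thousandths, comparable to the weights themselves. With its own scale, the error on channel 0 drops by more than an order of magnitude, while channel 1, whose scale does not change, is unaffected.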

(experimental) Quantized Transfer Learning for Computer Vision Tutorial

Combine transfer learning with quantization to train and run a quantized computer vision model.

Single-Machine Model Parallel Best Practices

Learn how to implement model parallel, a distributed training technique that splits a single model across different GPUs rather than replicating the entire model on each GPU.

Getting Started with Distributed Data Parallel

Learn the basics of when to use distributed data parallel versus data parallel, and work through an example to set it up.

Writing Distributed Applications with PyTorch

Set up the distributed package of PyTorch, use the different communication strategies, and go over some of the internals of the package.

Getting Started with Distributed RPC Framework

Learn how to build distributed training using the torch.distributed.rpc package.

(advanced) PyTorch 1.0 Distributed Trainer with Amazon AWS

Set up and run distributed training of a PyTorch model on Amazon AWS.

Implementing a Parameter Server Using Distributed RPC Framework

Walk through a simple example of implementing a parameter server using PyTorch’s Distributed RPC framework.

Additional Resources

Examples of PyTorch

A set of examples around PyTorch in Vision, Text, Reinforcement Learning, etc.
